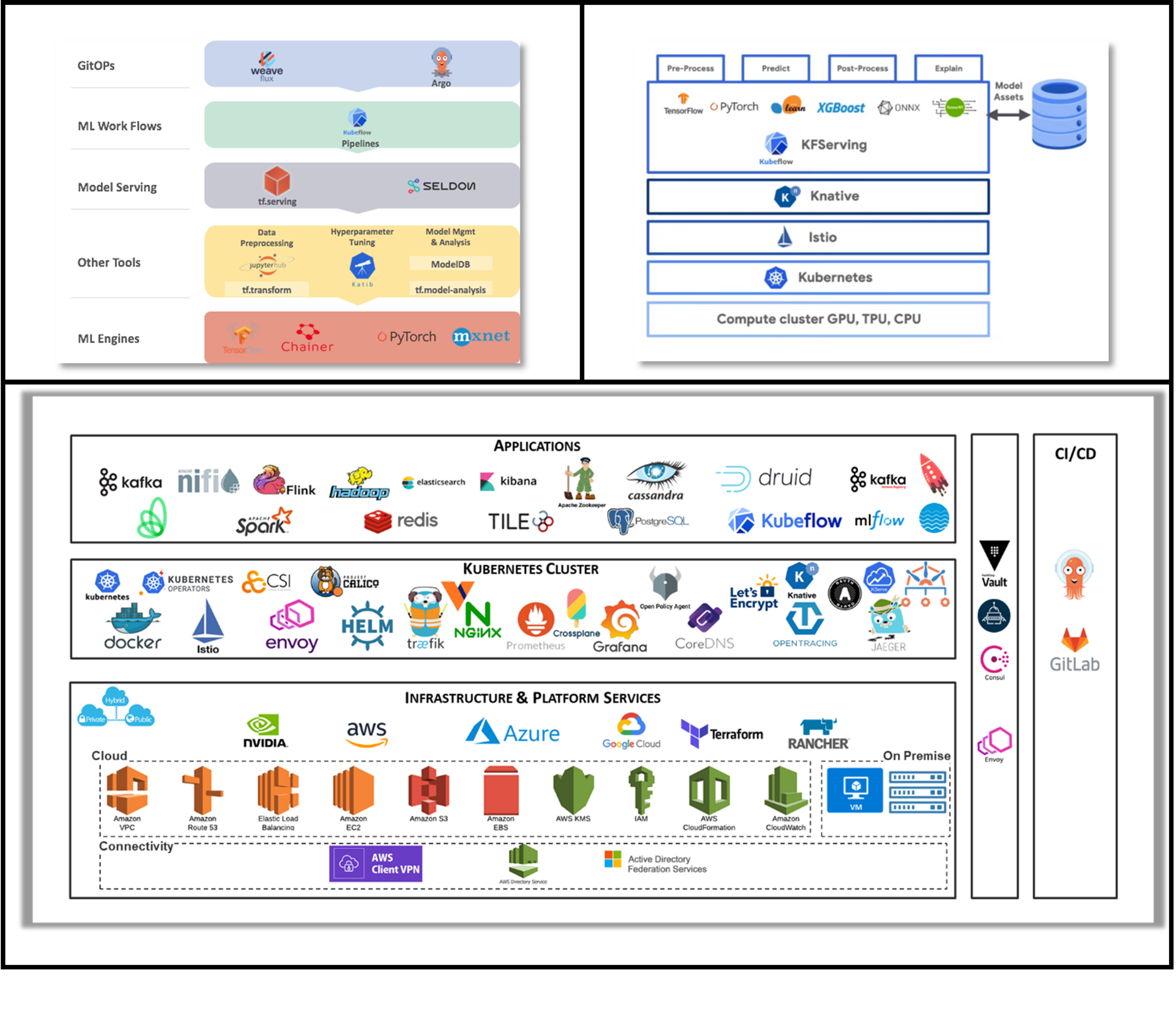

Full-Featured, Integrated MLOps and GitOps Environment:

We deliver a Machine Learning (ML) platform, available hosted or deployable on-premises, that supports the development, testing, and deployment of ML models. It provides a centralized, collaborative workspace in which data scientists and ML engineers can experiment with different algorithms, datasets, and configurations to build accurate and efficient models. Key components of the hosted ML experimentation environment:

- Notebooks and IDEs: The environment offers interactive notebooks (e.g., Jupyter) and integrated development environments (IDEs) that allow users to write and execute ML code, visualize data, and document their experiments.

- Data Management: It provides tools to manage and preprocess datasets, ensuring they are easily accessible, version-controlled, and reproducible across experiments.

- Experiment Tracking: The platform enables users to log and track their experiments’ parameters, metrics, and results, making it easier to compare different models and understand the performance of each.

- Model Versioning: The platform tracks each version of an ML model together with its associated code, facilitating reproducibility and collaboration among team members.

- Hyperparameter Tuning: The environment supports automated hyperparameter optimization, enabling users to efficiently search for the best-performing combination of hyperparameter values.

- Model Serving and Deployment: It offers capabilities to deploy trained models into production, making them accessible for real-world inference.

- Collaboration and Sharing: The platform encourages collaboration by allowing users to share their notebooks, code, and experiments with colleagues, promoting knowledge sharing and teamwork.

- Experiment Reproducibility: The environment ensures that experiments can be reproduced by capturing the dependencies, configurations, and data used during each trial.

- Scalability: The platform is designed to scale efficiently, enabling users to work with large datasets and complex ML models.

- Integration with ML Frameworks: The environment integrates with popular ML libraries and frameworks such as TensorFlow, PyTorch, and scikit-learn, allowing users to leverage their preferred tools.

- Resource Management: The platform optimizes the allocation of computational resources, such as GPUs or TPUs, to efficiently run experiments and minimize execution time.

- Model Monitoring and Debugging: The platform provides tools for monitoring the performance of deployed models in production and debugging potential issues.
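To make the interplay between experiment tracking, hyperparameter tuning, and reproducibility more concrete, the following is a minimal, generic sketch in plain Python. It is not this platform's API: `ExperimentTracker`, `Run`, `train_and_evaluate`, `grid_search`, and the `dataset-v1` label are hypothetical names, and the synthetic loss function stands in for real model training. The sketch runs an exhaustive grid search, logs one record per trial with its hyperparameters and metric, and fingerprints each trial's full configuration (hyperparameters, data version, random seed) so identical runs can be recognized and reproduced.

```python
import hashlib
import itertools
import json
import random
from dataclasses import dataclass, field


@dataclass
class Run:
    """One logged trial: its hyperparameters, resulting metrics, and a config fingerprint."""
    params: dict
    metrics: dict
    fingerprint: str


@dataclass
class ExperimentTracker:
    """Hypothetical in-memory tracker; a real platform would persist runs to a backend."""
    name: str
    runs: list = field(default_factory=list)

    def log_run(self, params: dict, metrics: dict, data_version: str, seed: int) -> Run:
        # Hash everything that determines the result (hyperparameters, data
        # version, random seed) so an identical configuration can be recognized
        # and re-run later.
        payload = json.dumps(
            {"params": params, "data": data_version, "seed": seed}, sort_keys=True
        )
        fingerprint = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
        run = Run(params=dict(params), metrics=dict(metrics), fingerprint=fingerprint)
        self.runs.append(run)
        return run

    def best(self, metric: str, minimize: bool = True) -> Run:
        # Pick the logged run with the lowest (or highest) value of `metric`.
        pick = min if minimize else max
        return pick(self.runs, key=lambda run: run.metrics[metric])


def train_and_evaluate(learning_rate: float, batch_size: int, seed: int) -> float:
    """Hypothetical stand-in for training: returns a synthetic validation loss.

    Seeding the RNG makes the small noise term deterministic, so the same
    configuration always produces the same loss.
    """
    rng = random.Random(seed)
    noise = rng.uniform(0.0, 0.01)
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 640 + noise


def grid_search(tracker: ExperimentTracker, grid: dict, data_version: str, seed: int = 0) -> Run:
    # Evaluate every combination in the grid and log one run per combination.
    names = sorted(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = train_and_evaluate(seed=seed, **params)
        tracker.log_run(params, {"val_loss": loss}, data_version, seed)
    return tracker.best("val_loss")


if __name__ == "__main__":
    tracker = ExperimentTracker(name="demo")
    grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [32, 64, 128]}
    best = grid_search(tracker, grid, data_version="dataset-v1")
    print(f"{len(tracker.runs)} runs; best {best.params} (fingerprint {best.fingerprint})")
```

Grid search is used here only because it is the simplest tuning strategy to show; automated tuners often use random or Bayesian search instead, but the logging pattern of one record per trial, carrying its complete configuration, stays the same.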